Path Tracing

Path Tracing using a CPU and a GPU Compute Shader

Path tracing is a ray tracing method in which rays are shot from the camera through each pixel into the scene. The light contributed at each hit is accumulated over several bounces and several passes to form the final color of each pixel.
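To make the "integrated over several passes" part concrete, here is a minimal sketch of a running average for one pixel. It is not the code from this project: `accumulate`, `noisy_sample` and `render_pixel` are hypothetical names, and the uniform noise simply stands in for the variation between real path traced passes.

```python
import random

def accumulate(average, sample, pass_index):
    """Fold one pass into the running mean (pass_index is zero-based)."""
    return tuple(a + (s - a) / (pass_index + 1) for a, s in zip(average, sample))

def noisy_sample(true_color, rng, noise=0.5):
    """Stand-in for one path traced pass: the true color plus random noise (assumption)."""
    return tuple(c + rng.uniform(-noise, noise) for c in true_color)

def render_pixel(true_color, passes, seed=0):
    """Average `passes` noisy samples of a single pixel."""
    rng = random.Random(seed)
    average = (0.0, 0.0, 0.0)
    for i in range(passes):
        average = accumulate(average, noisy_sample(true_color, rng), i)
    return average
```

Each pass is noisy on its own, but the running mean settles toward the underlying color as more passes are folded in, which is why the first frame looks grainy and later frames look clean.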

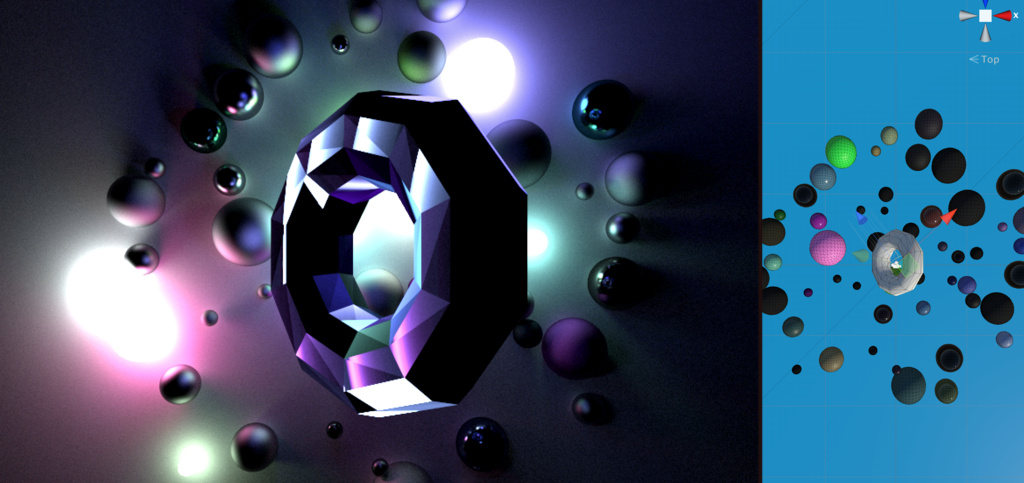

CPU Path Tracer

I started with a simple CPU-based path tracer in Unity. In this path tracer, the scene consists of collision geometry with materials that define the color, smoothness, light amount, etc.

Each ray is cast into the scene until it hits a collider. The hit determines whether the object is a light or a diffuse surface. A light returns its pure color. For a diffuse surface, the smoothness is checked and a secondary diffuse or reflection ray is cast, up to a maximum number of bounces per primary ray. Each primary and secondary ray contributes to the lighting for a pixel for that pass. Multiple passes are performed and integrated to form the final image.
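The loop described above can be sketched roughly as follows. This is an illustration under assumptions, not the Unity code: the scene is a list of hypothetical `Sphere` objects instead of Unity colliders, smoothness is treated as the probability of a mirror bounce, and surface colors are multiplied into a running `throughput`, which is one common way to combine bounces.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class Sphere:
    center: tuple
    radius: float
    color: tuple
    smoothness: float = 0.0   # 0 = fully diffuse, 1 = mirror (assumed meaning)
    is_light: bool = False

def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def mul(a, b): return (a[0] * b[0], a[1] * b[1], a[2] * b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, sphere):
    """Distance to the nearest hit in front of the ray, or None.
    Assumes a unit-length direction and a ray that starts outside the sphere."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - sphere.radius ** 2)
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-4 else None

def random_hemisphere(normal, rng):
    """Random unit vector on the same side of the surface as the normal."""
    while True:
        v = (rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1))
        length_sq = dot(v, v)
        if 1e-6 < length_sq <= 1.0:
            v = scale(v, 1.0 / math.sqrt(length_sq))
            return v if dot(v, normal) > 0.0 else scale(v, -1.0)

def reflect(direction, normal):
    return sub(direction, scale(normal, 2.0 * dot(direction, normal)))

def trace(origin, direction, scene, rng, max_bounces=4):
    """Follow one primary ray and its secondary rays; return the light it gathers."""
    throughput = (1.0, 1.0, 1.0)
    for _ in range(max_bounces + 1):
        nearest, t_near = None, math.inf
        for sphere in scene:
            t = hit_sphere(origin, direction, sphere)
            if t is not None and t < t_near:
                nearest, t_near = sphere, t
        if nearest is None:
            return (0.0, 0.0, 0.0)                  # ray left the scene
        if nearest.is_light:
            return mul(throughput, nearest.color)   # light: return its color
        origin = add(origin, scale(direction, t_near))
        normal = normalize(sub(origin, nearest.center))
        throughput = mul(throughput, nearest.color)
        if rng.random() < nearest.smoothness:
            direction = reflect(direction, normal)          # reflection ray
        else:
            direction = random_hemisphere(normal, rng)      # diffuse ray
    return (0.0, 0.0, 0.0)                          # bounce budget used up
```

Calling `trace` once per pixel gives one noisy pass. Repeating it with fresh random numbers and averaging the results gives the integrated image.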

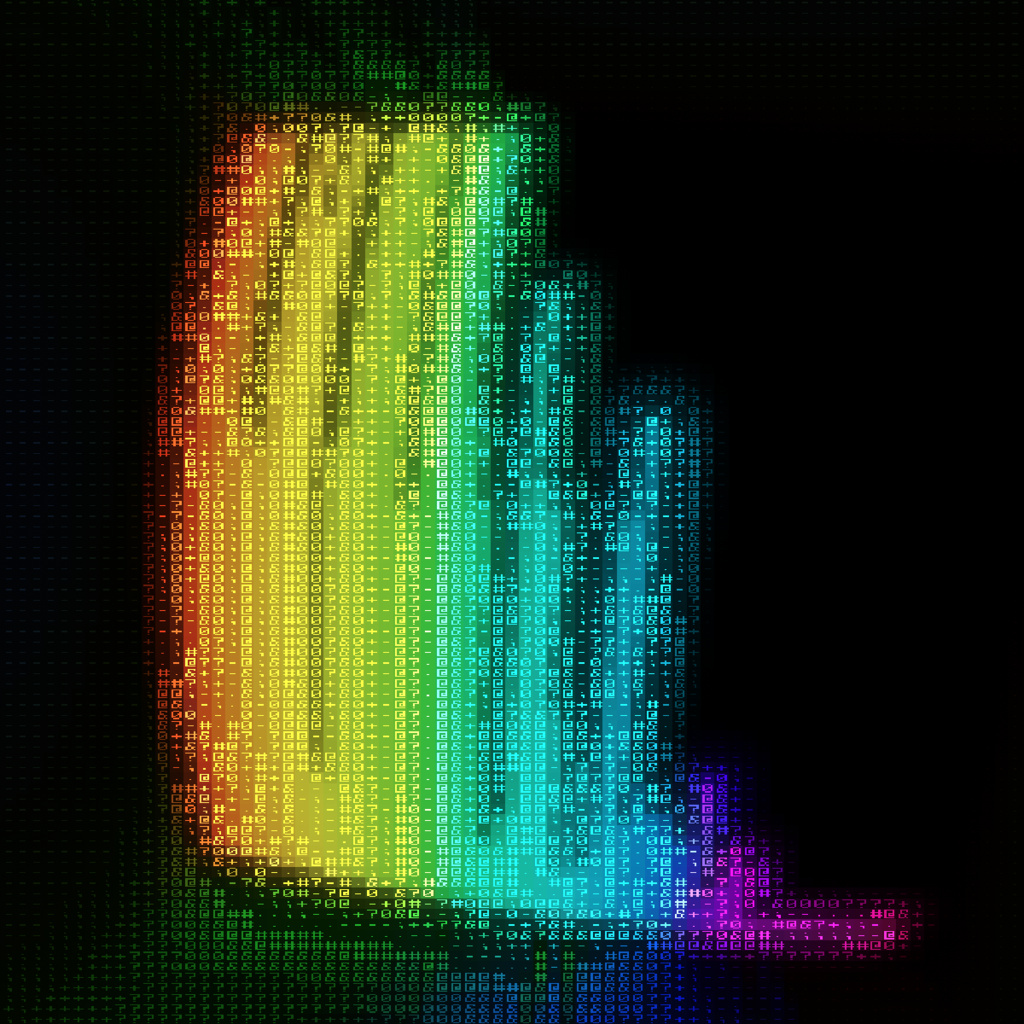

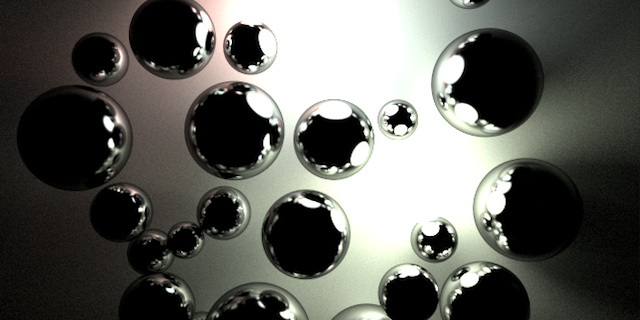

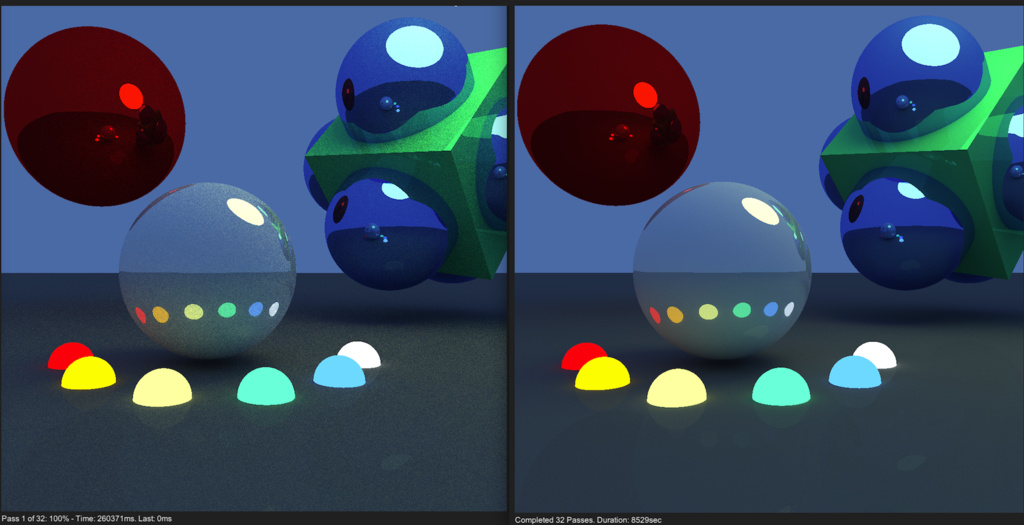

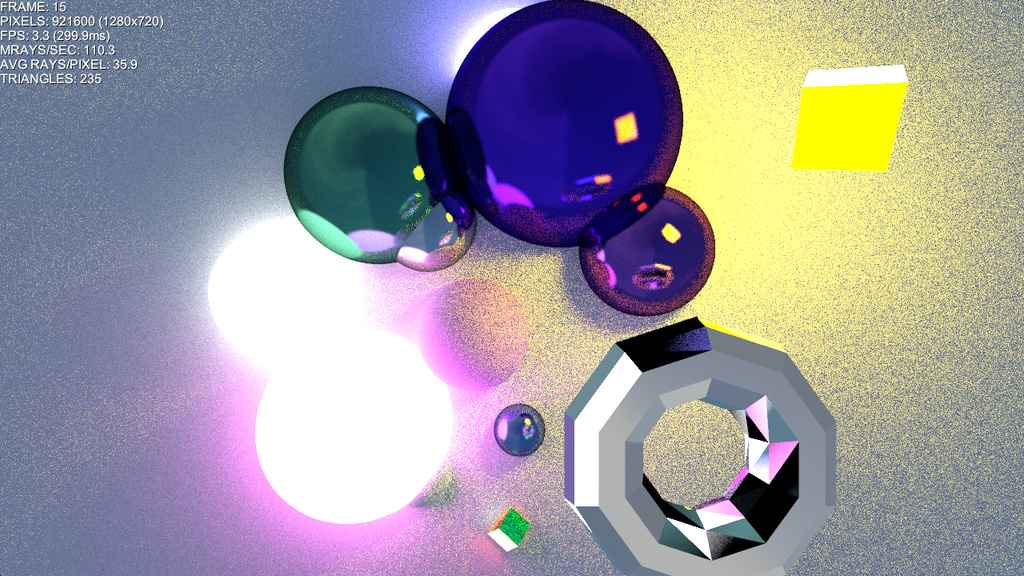

The final image was pretty and crisp, but it took a really long time to generate. The example below shows the noisy first frame and the final result after 32 passes, which took about 2.4 hours (roughly 4.5 minutes per pass).

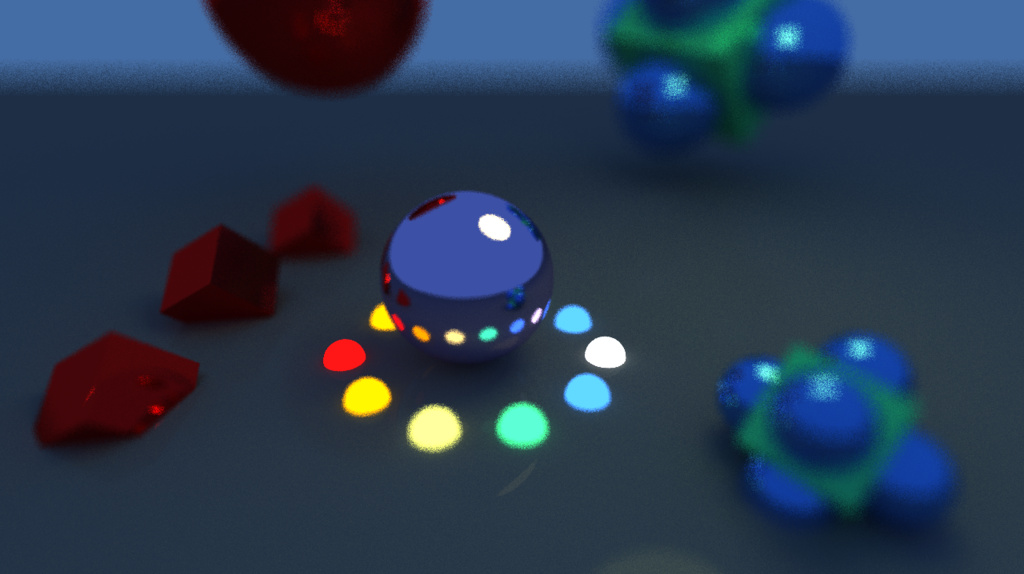

Depth of field was achieved by jittering the start position on the camera’s focal plane for the primary raycast.
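As a rough illustration of jittered primary rays, here is one common thin-lens style setup. It may differ from what the original code does: this sketch jitters the ray origin on a small disk around the camera and aims every ray at the point that should be in focus. The function name and the parameters `focus_distance` and `aperture_radius` are assumptions made for the example.

```python
import math
import random

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def depth_of_field_ray(cam_pos, pixel_dir, right, up,
                       focus_distance, aperture_radius, rng):
    """Return a jittered (origin, direction) pair for one primary ray.

    pixel_dir is the unit direction of the un-jittered ray through the pixel;
    right and up are the camera's unit basis vectors.
    """
    # Point along the original ray that should stay sharp.
    focus_point = tuple(c + d * focus_distance for c, d in zip(cam_pos, pixel_dir))

    # Uniform random offset on a disk of the given radius.
    r = aperture_radius * math.sqrt(rng.random())
    theta = 2.0 * math.pi * rng.random()
    dx, dy = r * math.cos(theta), r * math.sin(theta)

    # Move the start position, then re-aim at the focus point.
    origin = tuple(c + rt * dx + u * dy for c, rt, u in zip(cam_pos, right, up))
    direction = normalize(tuple(f - o for f, o in zip(focus_point, origin)))
    return origin, direction
```

Objects at the focus distance are hit at the same point by every jittered ray, so they stay sharp. Anything nearer or farther is hit at slightly different points on each pass and blurs once the passes are averaged.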

The code for the CPU path tracer is pretty simple and produces pleasing results. The main drawbacks are the slow render times (4-5 minutes per pass on a high quality frame) and the challenging scene setup, since the path tracing camera didn’t line up 1:1 with the normal Unity scene camera.

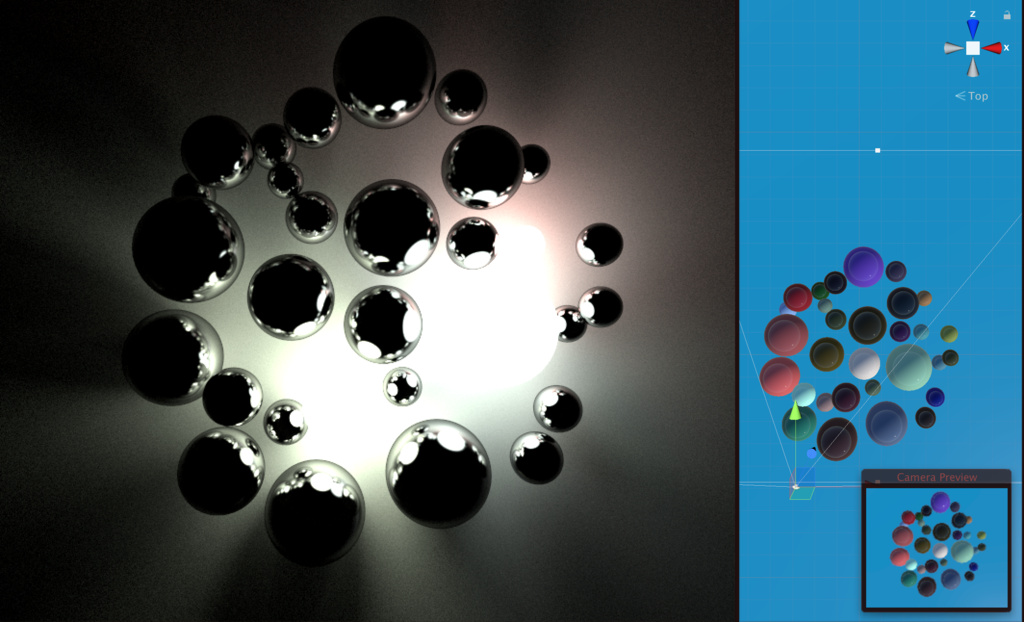

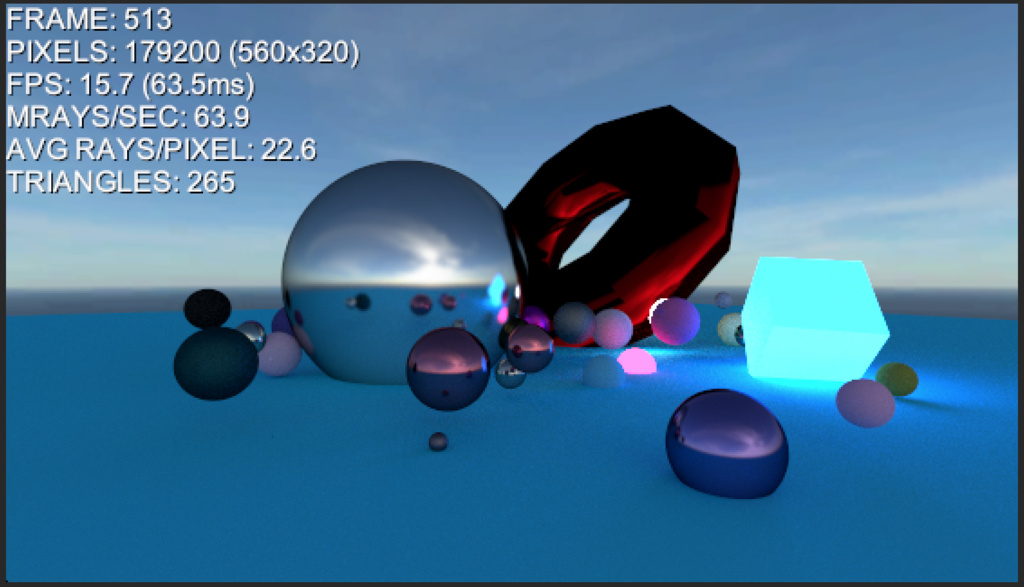

GPU Path Tracer

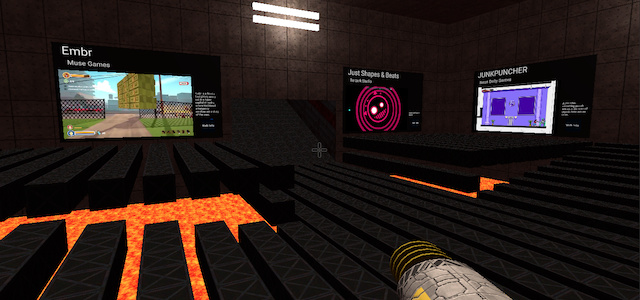

Since path tracing is a highly parallel problem, it should in theory map well to the GPU as a compute shader. In this version, the spheres and triangles that comprise the scene are dispatched to a compute shader to handle the path tracing. The result of the compute shader is then merged with the screen image to display a progressively more refined image. The GPU path tracer is much faster than the CPU version, achieving 10-15 fps in a small window (using an AMD Vega 56).

An improvement over the CPU version is that the collision checks are performed against the actual geometry (spheres or triangles), so the result better matches the scene as seen through the built-in Unity camera.

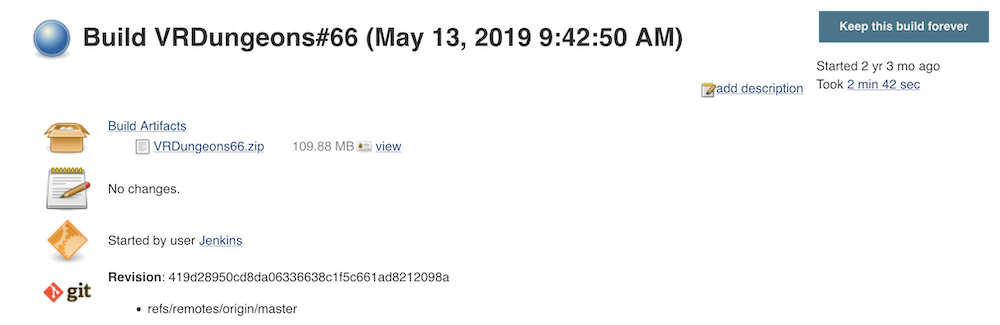

GPU profiling is pretty much non-existent on macOS with Unity, since external GPUs are not supported by either Unity’s or AMD’s GPU profiler. Intel’s profiler ran, but didn’t give much meaningful data or insight into where my GPU usage could be improved. Rudimentary performance data can be returned embedded within the image data as the number of rays cast for each frame. This number can then be turned into a rate in MRays/sec (millions of rays per second), which serves as a general benchmark for whether optimizations are helping or hurting.
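The conversion from a per-frame ray count to MRays/sec is simple arithmetic. A small sketch, with a hypothetical function name and made-up example numbers:

```python
def mrays_per_second(rays_cast, frame_seconds):
    """Convert a per-frame ray count and frame time into millions of rays per second."""
    if frame_seconds <= 0.0:
        raise ValueError("frame_seconds must be positive")
    return rays_cast / frame_seconds / 1e6

# Illustrative numbers only: 2.5 million rays in a 0.125 s frame (8 fps).
rate = mrays_per_second(2_500_000, 0.125)   # 20.0 MRays/sec
```

Comparing this number before and after a change gives a rough signal, even without a real profiler, as long as the scene and window size stay the same between runs.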

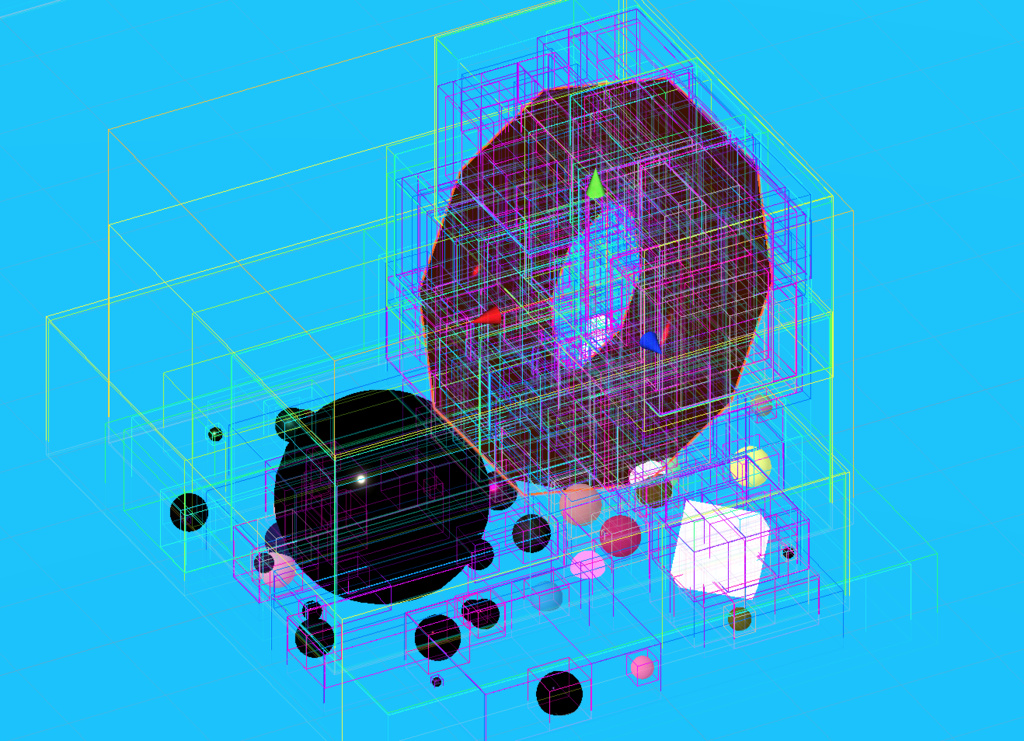

As the scene gains complexity, the number of triangle intersection tests per ray becomes a bottleneck. Also, the primary rays are pretty well clustered, so they should make good use of the memory cached for each thread group. The secondary rays scatter, however, and cache misses then become an issue.

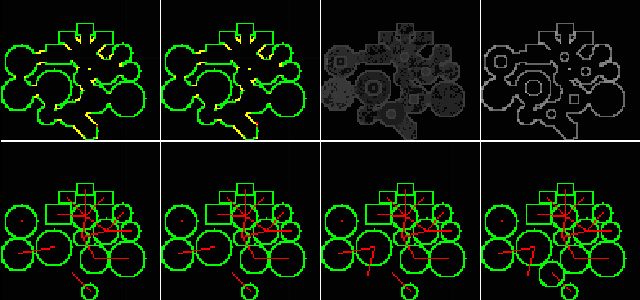

One idea for cutting down on the raw ray-to-triangle checks is to break the scene into a bounding volume hierarchy (BVH). The BVH lets each ray perform a few simple axis-aligned bounding box (AABB) checks as it narrows in on the target triangle, instead of testing every triangle.
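A minimal sketch of the idea, written on the CPU for readability rather than as the compute shader version: a standard slab test for ray versus AABB, plus a stack-based walk that only collects triangles from leaves whose boxes the ray actually enters. `BVHNode` and `candidate_triangles` are hypothetical names, and building the hierarchy is left out.

```python
import math
from dataclasses import dataclass, field

@dataclass
class BVHNode:
    box_min: tuple
    box_max: tuple
    children: list = field(default_factory=list)    # inner node: child nodes
    triangles: list = field(default_factory=list)   # leaf node: triangle indices

def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        if direction[axis] == 0.0:
            # Ray is parallel to this slab: it must already start inside it.
            if not (box_min[axis] <= origin[axis] <= box_max[axis]):
                return False
            continue
        t1 = (box_min[axis] - origin[axis]) / direction[axis]
        t2 = (box_max[axis] - origin[axis]) / direction[axis]
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False
    return True

def candidate_triangles(root, origin, direction):
    """Collect the triangle indices that still need an exact intersection test."""
    stack, found = [root], []
    while stack:
        node = stack.pop()
        if not ray_hits_aabb(origin, direction, node.box_min, node.box_max):
            continue                      # skip this whole subtree
        if node.children:
            stack.extend(node.children)
        else:
            found.extend(node.triangles)
    return found
```

The explicit stack is used instead of recursion because shader languages generally do not allow recursive functions, so a GPU port would need the same shape.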

There is still more work to be done on this project. The BVH needs to be better implemented in the compute shader and some optimizations need to be made to better cluster secondary rays.

Hope you found this article on path tracing interesting,

Jesse from Smash/Riot