VR Planets

Procedural Planet Generation for VR

This article discusses adapting the Procedural Planet generation to VR using an HTC Vive.

The biggest challenge in porting the Procedural Planet generation algorithm to VR was getting generation to fit within an 11ms frame, the budget for a headset running at 90fps.

My original method of generation simply stalled the main thread for a few hundred milliseconds during scene / level load, which the player never noticed. Regenerating planets in a VR environment, however, adds the challenge of not interrupting the steady 90fps. Any missed frame is felt by the player and results in a poor VR experience.
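The 11ms figure follows directly from the refresh rate: at 90fps each frame gets 1000 / 90 ≈ 11.1ms. The small sketch below turns that into numbers, including how many frames a single blocking task occupies. It is written in Python only for brevity; the helper names `frame_budget_ms` and `frames_spanned` are hypothetical, and the 300ms value is an illustrative stand-in for "a few hundred milliseconds", not a measurement.

```python
import math

def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available to produce one frame at the given refresh rate."""
    return 1000.0 / refresh_hz

def frames_spanned(work_ms: float, refresh_hz: float) -> int:
    """Display frames that a single blocking task of work_ms occupies.

    Computed as work_ms * refresh_hz / 1000 to avoid dividing by a rounded budget.
    """
    return math.ceil(work_ms * refresh_hz / 1000.0)

if __name__ == "__main__":
    budget = frame_budget_ms(90)     # ≈ 11.1 ms on a 90 Hz headset
    stall = frames_spanned(300, 90)  # 300 ms: illustrative "few hundred ms" stall
    print(f"budget: {budget:.1f} ms; a 300 ms stall blocks about {stall} frames")
```

By the same arithmetic, `frames_spanned(100, 90)` is 9 and `frames_spanned(12, 90)` is 2, so even a 12ms slice of work spills into a second frame.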

I went through a few different ideas before settling on the final VR-friendly planet generation implementation:

CPU generation on Main Thread (Stall): Simply stall the main thread for a few hundred milliseconds while the planet generates. This is terrible because the VR screen will go black during the stall, which exacts a heavy price in VR sickness.

CPU generation on Main Thread (Coroutines): I tried to break the generation up over many frames using coroutines. However, a few individual steps still exceeded the 11ms/frame budget on their own, and the resulting frame misses, jitter, and less responsive head tracking made for a poor VR experience.

GPU Compute Shader: The next idea was to push all of the planet texture / heightmap generation over to the GPU using a compute shader. Texture generation is a highly parallel problem, and I thought this would be the end-all solution. However, even after heavy optimization, generation still took around 100ms, roughly nine times the 11ms/frame budget.

GPU Compute Shader (Coroutines): Spreading the compute shader generation work over multiple frames using coroutines was almost the answer, but at 12ms/frame it was still just outside the budget and didn't leave any frame time for other tasks.

CPU generation on separate thread: The final answer was to simply move all of the generation over to a worker thread. Unity is pretty grouchy about threading, and the big caveat is that code running off the main thread cannot safely call the Unity API, most of which is main-thread only. The worker thread generates all of the raw texture and mesh data, and the main thread then uses that data to construct the planet meshes.
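The split between a worker that produces plain data and a main thread that only consumes it can be sketched as follows. This is a minimal illustration in Python, not the project's Unity code: Python's `threading` and `queue` modules stand in for whatever threading primitive the real implementation uses, and `PlanetData`, `generate_planet_data`, `start_generation`, and `poll_finished` are hypothetical names. The random heightmap is a placeholder for the real noise-based generation.

```python
import queue
import random
import threading
from dataclasses import dataclass

@dataclass
class PlanetData:
    """Raw, engine-free output of the worker thread (plain Python values only)."""
    resolution: int
    heights: list  # resolution * resolution height samples, row-major

def generate_planet_data(seed: int, resolution: int) -> PlanetData:
    # Hypothetical placeholder for the real noise/heightmap generation.
    rng = random.Random(seed)  # private RNG instance: no shared state between threads
    heights = [rng.uniform(0.9, 1.1) for _ in range(resolution * resolution)]
    return PlanetData(resolution, heights)

def start_generation(seed: int, resolution: int, results: queue.Queue) -> threading.Thread:
    """Run generation on a worker thread; the finished data is posted to `results`."""
    def work():
        results.put(generate_planet_data(seed, resolution))
    worker = threading.Thread(target=work, daemon=True)
    worker.start()
    return worker

def poll_finished(results: queue.Queue):
    """Call once per frame on the main thread. Returns PlanetData, or None if not ready."""
    try:
        return results.get_nowait()  # never blocks, so the frame is never stalled
    except queue.Empty:
        return None
```

Each frame, the main thread calls `poll_finished(results)`; once it returns data, the main thread (and only the main thread) builds the engine mesh from it.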

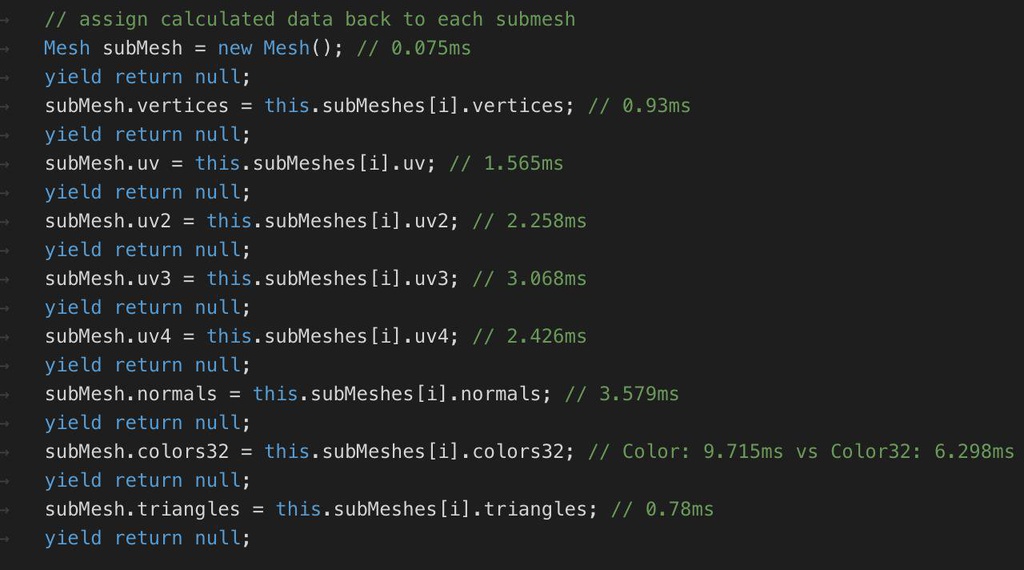

However, there was one last hurdle to overcome: the amount of time it takes Unity to construct the actual mesh object. Taking the mesh data from the worker thread and assigning all of it to a Unity Mesh object in a single frame takes longer than the 11ms/frame budget. The performant answer is to assign the planet data to the mesh object using a coroutine that yields after each step, so that each assignment lands in its own frame. Also note that assigning Mesh.colors32 (an array of Color32, four bytes per color) performs much better than assigning Mesh.colors (an array of Color, four floats per color). See the Mesh.colors32 documentation.
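A minimal sketch of the yield-after-each-step idea, again in Python for brevity: a generator plays the role of a Unity coroutine (where `yield return null` resumes on the next frame). `FakeMesh`, `to_color32`, `assign_mesh`, and `run_frames` are hypothetical names, not Unity API; in Unity the corresponding properties would be Mesh.vertices, Mesh.triangles, Mesh.normals, and Mesh.colors32. The step granularity is an assumption; vertices go before triangles because the triangle indices refer to the vertex array.

```python
from dataclasses import dataclass, field

@dataclass
class FakeMesh:
    """Hypothetical stand-in for an engine mesh; it only stores what is assigned."""
    vertices: list = field(default_factory=list)
    triangles: list = field(default_factory=list)
    normals: list = field(default_factory=list)
    colors32: list = field(default_factory=list)

def to_color32(rgba):
    """Pack float RGBA in [0, 1] into four 0-255 ints (4 bytes vs 4 floats per color).

    Plain arithmetic, so this can run on the worker thread ahead of time.
    """
    return tuple(round(min(max(c, 0.0), 1.0) * 255) for c in rgba)

def assign_mesh(mesh, data):
    """Coroutine-style generator: one attribute per step, yielding between steps.

    The driver resumes it once per frame, so each assignment lands in its own frame.
    """
    mesh.vertices = data["vertices"]
    yield "vertices"
    mesh.triangles = data["triangles"]
    yield "triangles"
    mesh.normals = data["normals"]
    yield "normals"
    mesh.colors32 = data["colors32"]
    yield "colors32"

def run_frames(coroutine):
    """Simulated frame loop: advance the coroutine one step per frame."""
    frame_log = []
    for frame, step in enumerate(coroutine):
        frame_log.append((frame, step))
    return frame_log
```

For a three-vertex triangle, `run_frames(assign_mesh(FakeMesh(), data))` spreads the four assignments over four simulated frames, one attribute per frame.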

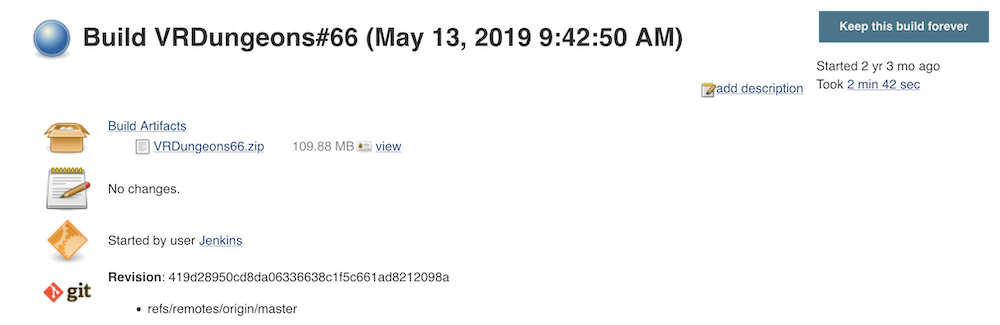

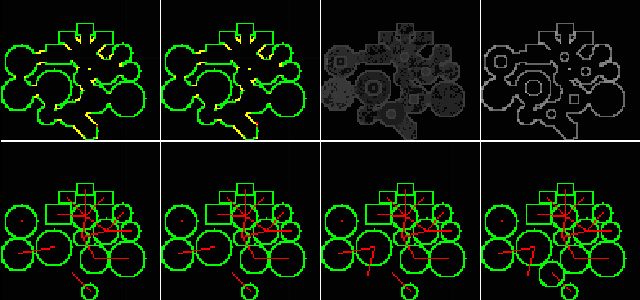

Here's a screenshot of the time it takes to assign each part of a large planet's data to a Unity Mesh:

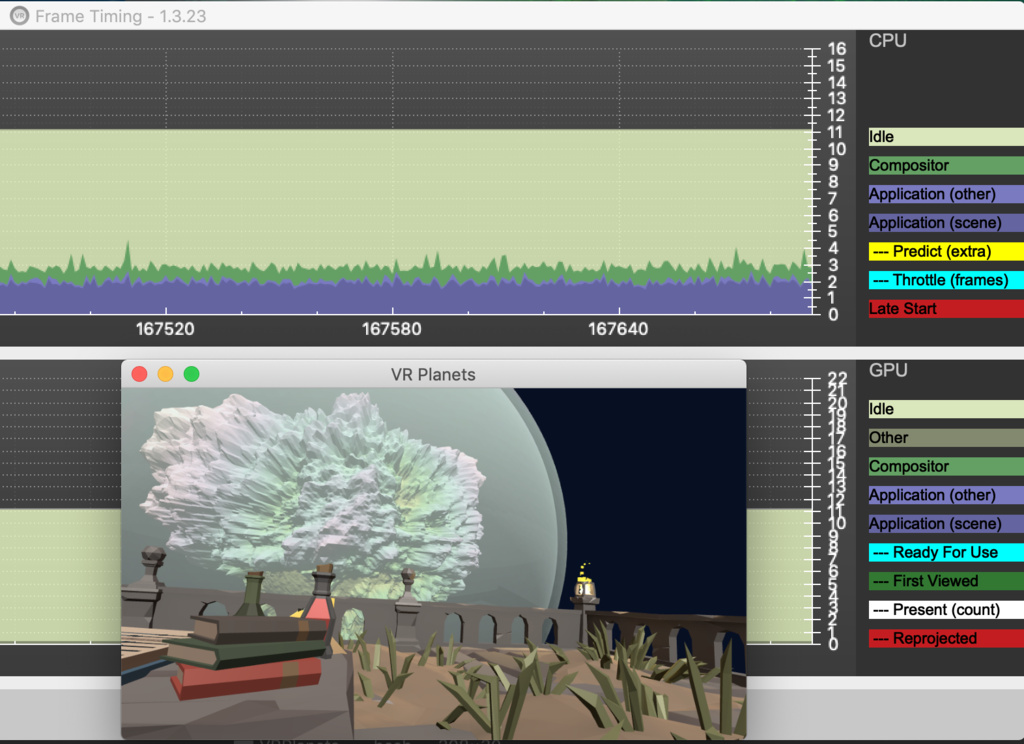

Spreading the final mesh construction over a small number of main-thread frames keeps each frame well within the VR frame budget and is very performant overall. The times above were measured in the Unity Editor, so they are somewhat higher than in a compiled build. The performance graph below shows frame times during planet generation in a compiled build. Frame times hover in the 3-5ms/frame range, which results in a very smooth VR experience.

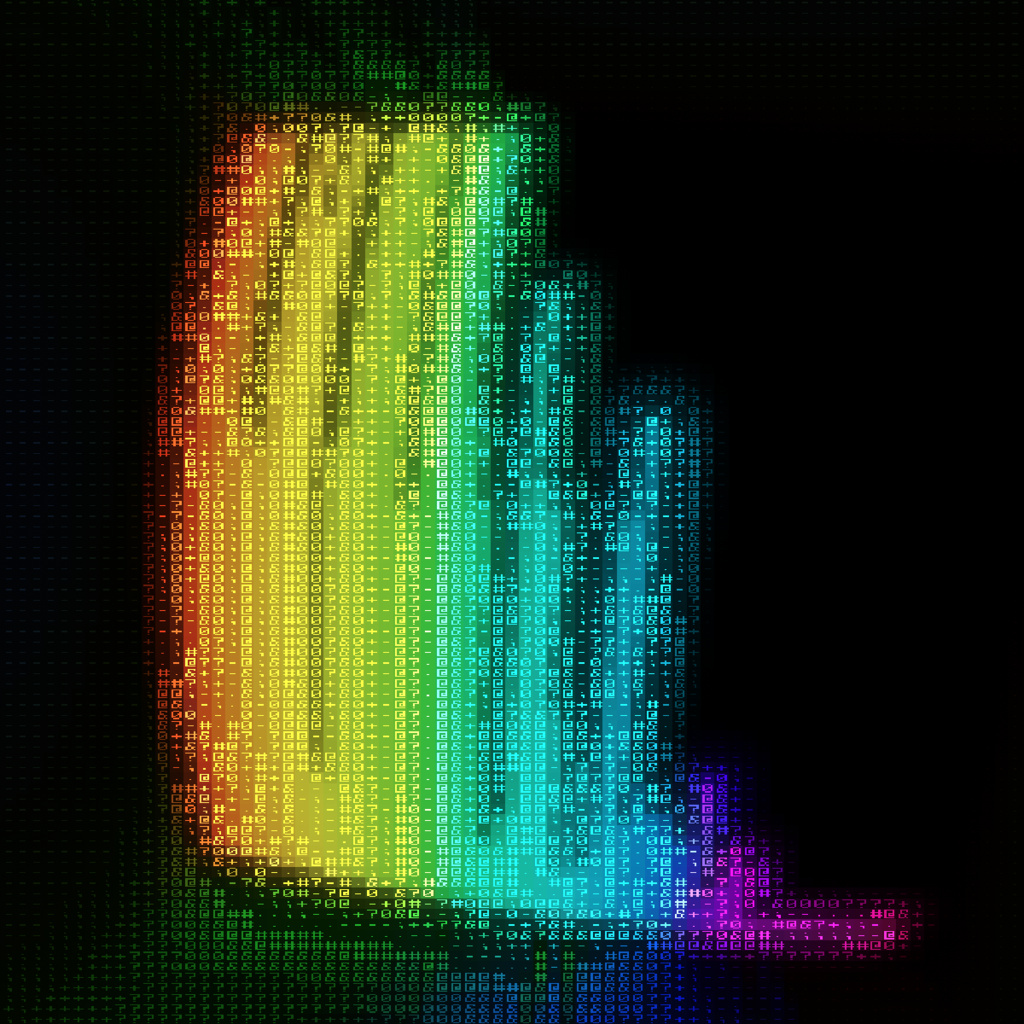

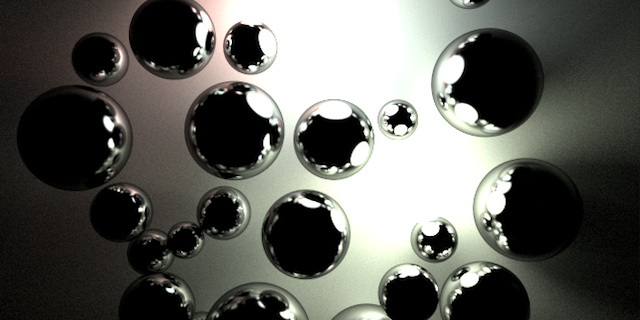

Here are a few examples of the planets as viewed in VR.

Currently, the toggle box controls planet generation. Throwing the die generates a new set of planet parameters, which are shown in the book on the table. The parameters may also be adjusted using the book. Once generated, the planet can be moved and scaled using a Vive controller.

The planet generator is very performant and fairly robust; however, there is still a bit more work I want to do on this project when I find a little more time.

I hope you found this article on adapting procedural planet generation to VR interesting,

Jesse from Smash/Riot